ECoDepth

Effective Conditioning of Diffusion Models for Monocular Depth Estimation

CVPR 2024

Abstract

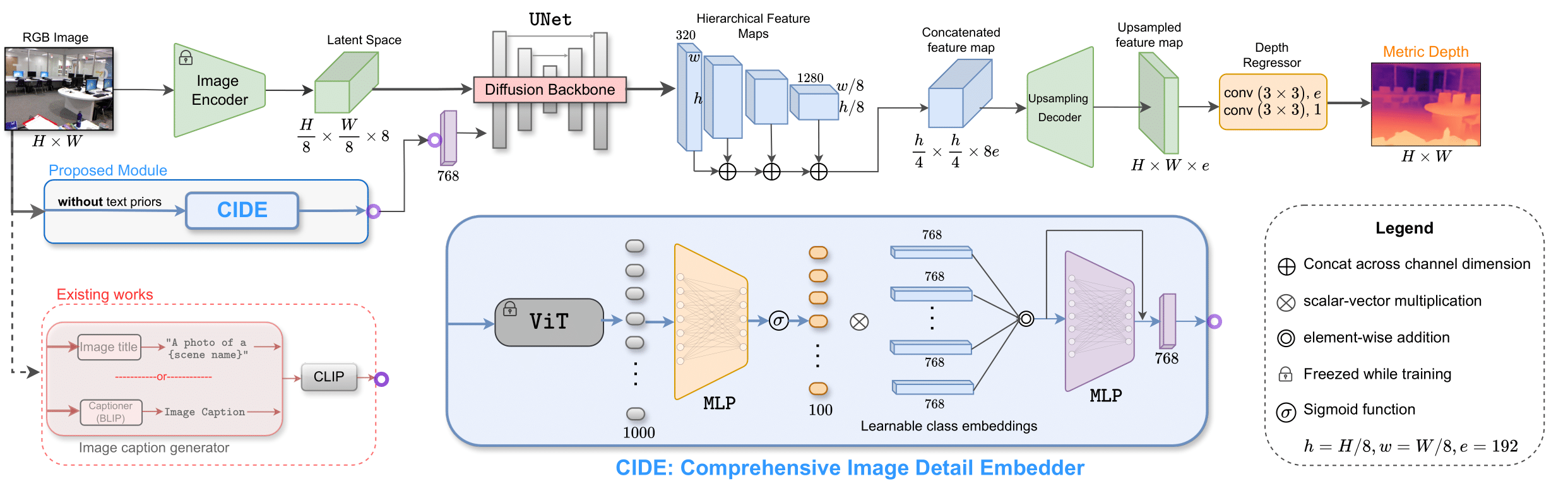

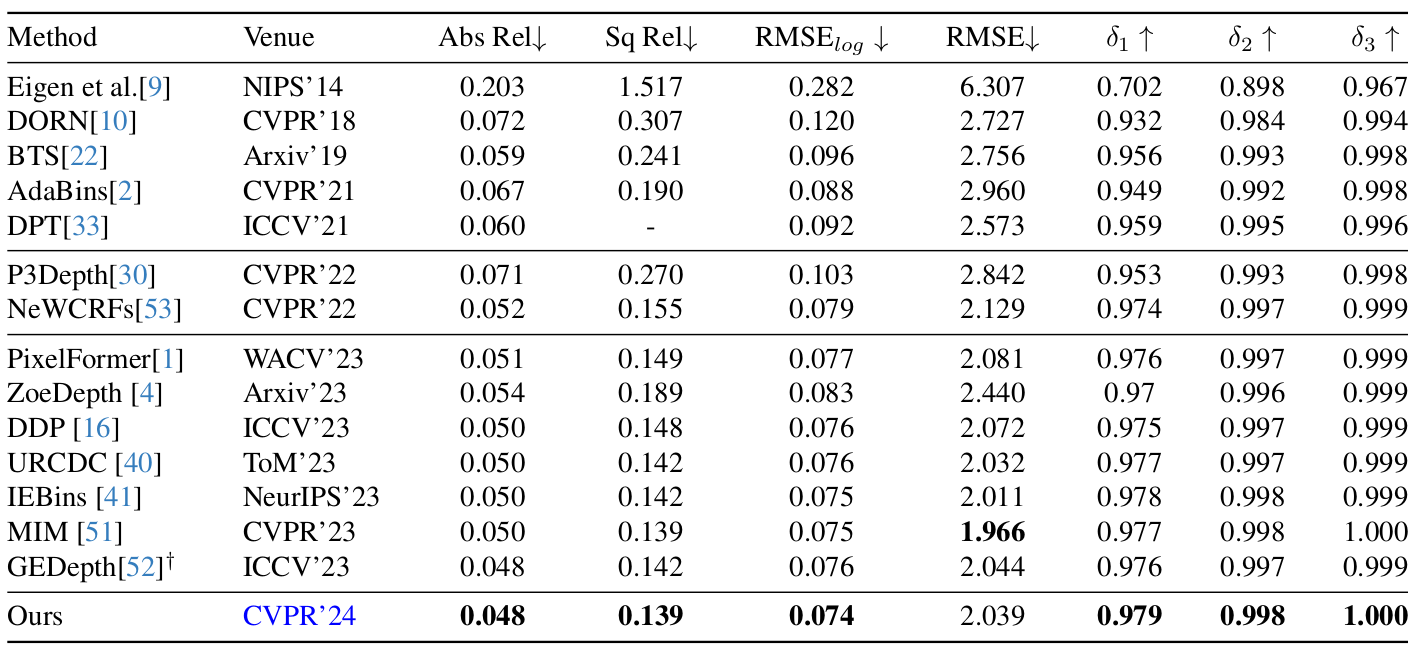

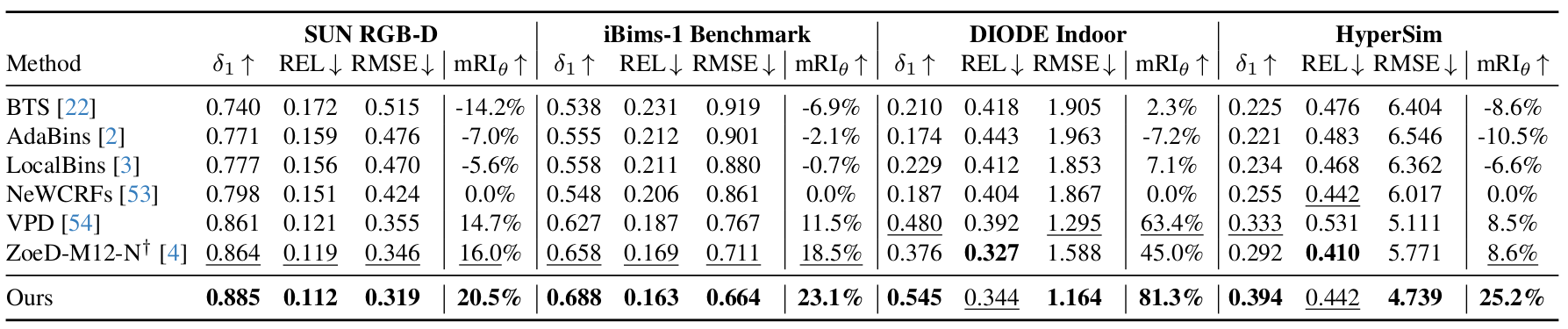

In the absence of parallax cues, a learning-based single image depth estimation (SIDE) model relies heavily on shading and contextual cues in the image. While this simplicity is attractive, it is necessary to train such models on large and varied datasets, which are difficult to capture. It has been shown that using embeddings from pre-trained foundational models, such as CLIP, improves zero-shot transfer in several applications. Taking inspiration from this, in our paper we explore the use of global image priors generated from a pre-trained ViT model to provide more detailed contextual information. We argue that the embedding vector from a ViT model, pre-trained on a large dataset, captures more information relevant to SIDE than the usual route of generating pseudo image captions followed by CLIP-based text embeddings. Based on this idea, we propose a new SIDE model using a diffusion backbone conditioned on ViT embeddings. Our proposed design establishes a new state of the art (SOTA) for SIDE on the NYU Depth v2 dataset, achieving an Abs Rel error of 0.059 (14% improvement) compared to 0.069 by the current SOTA (VPD). On the KITTI dataset, it achieves a Sq Rel error of 0.139 (2% improvement) compared to 0.142 by the current SOTA (GEDepth). For zero-shot transfer with a model trained on NYU Depth v2, we report mean relative improvements of (20%, 23%, 81%, 25%) over NeWCRF on the (Sun-RGBD, iBims1, DIODE, HyperSim) datasets, compared to (16%, 18%, 45%, 9%) by ZoeDepth.
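The two error metrics quoted in the abstract, and the percentage improvements derived from them, can be made concrete with a short sketch. It assumes the standard definitions used in the monocular depth literature (Abs Rel as the mean of |pred - gt| / gt, Sq Rel as the mean of (pred - gt)^2 / gt, both over pixels with valid ground truth). The function names are illustrative and are not taken from the ECoDepth code.

```python
def abs_rel(pred, gt):
    """Absolute relative error: mean of |pred - gt| / gt over valid pixels (gt > 0)."""
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    return sum(abs(p - g) / g for p, g in pairs) / len(pairs)


def sq_rel(pred, gt):
    """Squared relative error: mean of (pred - gt)^2 / gt over valid pixels (gt > 0)."""
    pairs = [(p, g) for p, g in zip(pred, gt) if g > 0]
    return sum((p - g) ** 2 / g for p, g in pairs) / len(pairs)


def relative_improvement(baseline, ours):
    """Fractional reduction of a lower-is-better error relative to a baseline."""
    return (baseline - ours) / baseline


# Error values quoted in the abstract.
nyu = relative_improvement(0.069, 0.059)    # Abs Rel, VPD vs. ECoDepth
kitti = relative_improvement(0.142, 0.139)  # Sq Rel, GEDepth vs. ECoDepth

print(f"NYU Depth v2 Abs Rel improvement: {nyu:.1%}")
print(f"KITTI Sq Rel improvement: {kitti:.1%}")
```

Under these definitions the reductions come out at roughly 14.5% and 2.1%, in line with the 14% and 2% figures quoted in the abstract.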

Demo: Zero-Shot Performance on Video

State-of-the-art results on Monocular Depth Estimation

Our model achieves state-of-the-art results on the indoor dataset NYUv2 and outdoor dataset KITTI for metric depth estimation using monocular images.

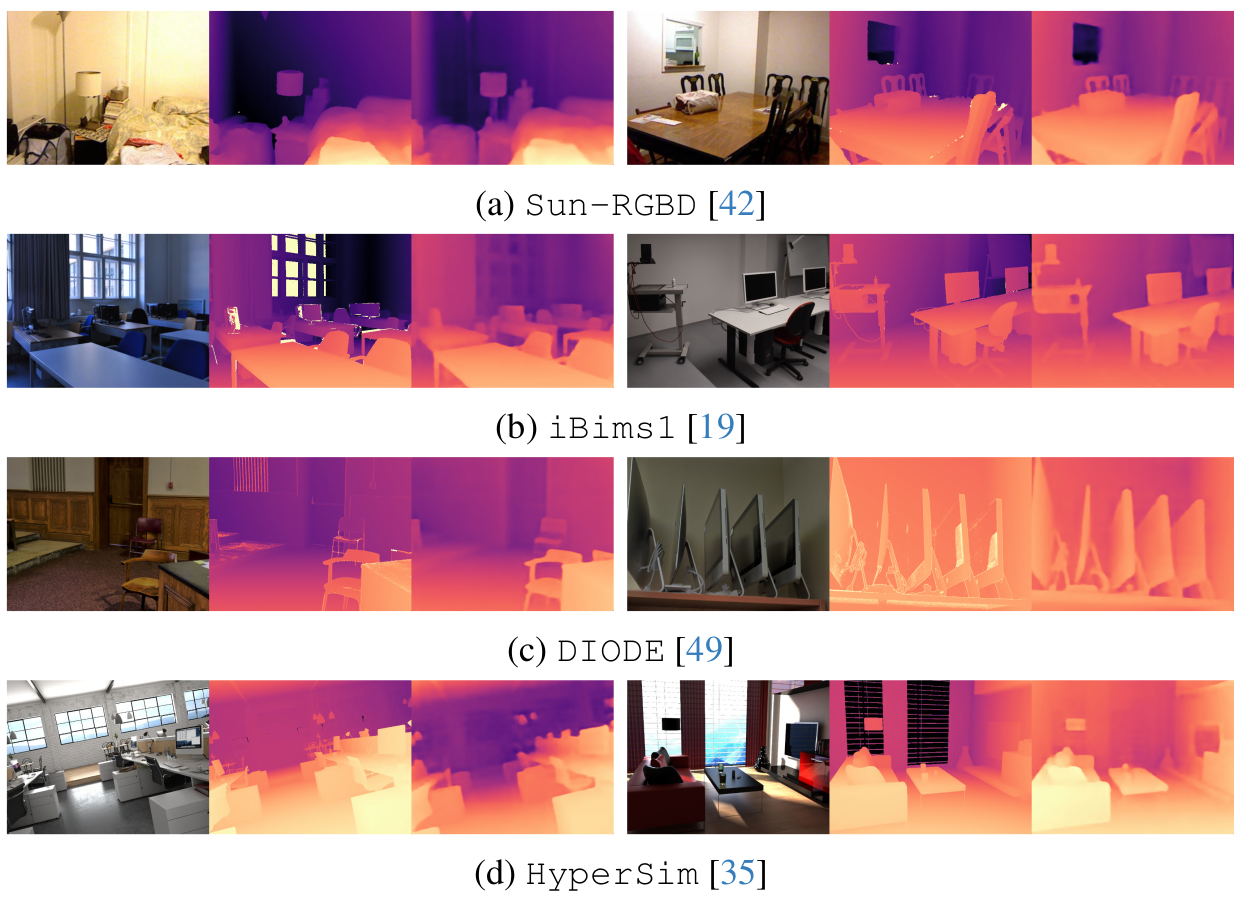

Generalization and Zero-Shot Transfer

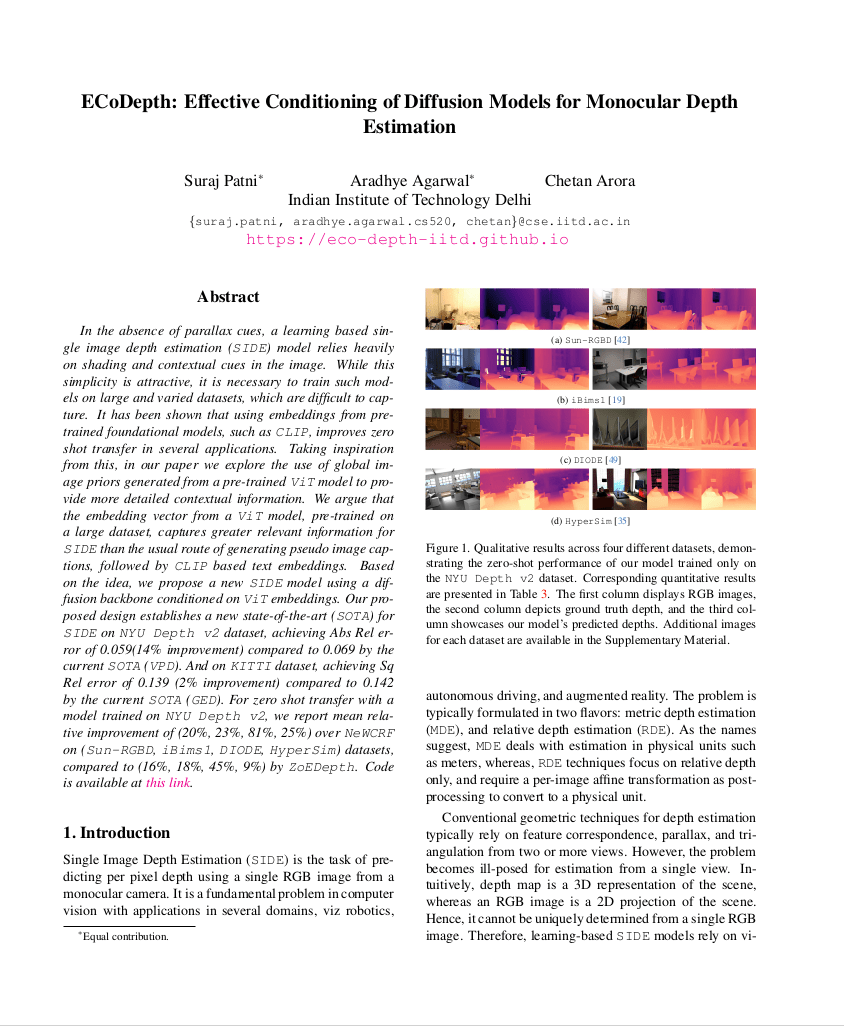

Our model generalizes well to unseen data and achieves state-of-the-art results for zero-shot transfer. Trained only on the NYUv2 dataset, it is tested on four other datasets: Sun-RGBD, iBims1, DIODE, and HyperSim. On these it achieves SOTA results compared to all previous methods that claim to generalize to unseen data (e.g., ZoeDepth).
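The zero-shot comparison is summarized as a mean relative improvement over a baseline. A minimal sketch of how such a figure could be computed is given below, assuming it averages per-metric relative improvements over a threshold accuracy (higher is better) and two error metrics (lower is better), in the style used by ZoeDepth. This page does not spell out the exact metric set, and the numbers in the example are made up purely for illustration; they are not results from the paper.

```python
def signed_relative_improvement(baseline, ours, higher_is_better):
    """Relative change versus the baseline, positive when `ours` is better."""
    delta = ours - baseline if higher_is_better else baseline - ours
    return delta / baseline


def mean_relative_improvement(baseline, ours, higher_is_better):
    """Average the per-metric relative improvements over all metrics."""
    ris = [
        signed_relative_improvement(baseline[m], ours[m], higher_is_better[m])
        for m in baseline
    ]
    return sum(ris) / len(ris)


# Assumed metric set; which metrics enter the average is an assumption here.
HIGHER_IS_BETTER = {"delta1": True, "abs_rel": False, "rmse": False}

# Hypothetical values for illustration only (not taken from the paper).
baseline = {"delta1": 0.80, "abs_rel": 0.20, "rmse": 0.50}
candidate = {"delta1": 0.88, "abs_rel": 0.16, "rmse": 0.45}

mri = mean_relative_improvement(baseline, candidate, HIGHER_IS_BETTER)
print(f"Mean relative improvement: {mri:.1%}")
```

Averaging relative rather than absolute changes keeps metrics with different scales, such as RMSE in metres and a unitless accuracy, from dominating the summary.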

Zero-Shot Qualitative Results

BibTeX (Citation)

@InProceedings{Patni_2024_CVPR,
    author    = {Patni, Suraj and Agarwal, Aradhye and Arora, Chetan},
    title     = {ECoDepth: Effective Conditioning of Diffusion Models for Monocular Depth Estimation},
    booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
    month     = {June},
    year      = {2024},
    pages     = {28285-28295}
}